Process

Over the course of the project, our team went through several phases that shaped our final outcome. The process began with extensive research and brainstorming sessions, which eventually led us to consolidate our concept: developing a graphic novel generated entirely from AI-driven text prompts. This applied not only to the images but also to the storyline, script and dialogue.

As we set out to bring this idea to life, our primary objective remained clear: to observe and understand how far AI can go in interpreting the unknown, and to assess its capacity to reach human sentiments. The process involved multiple stages:

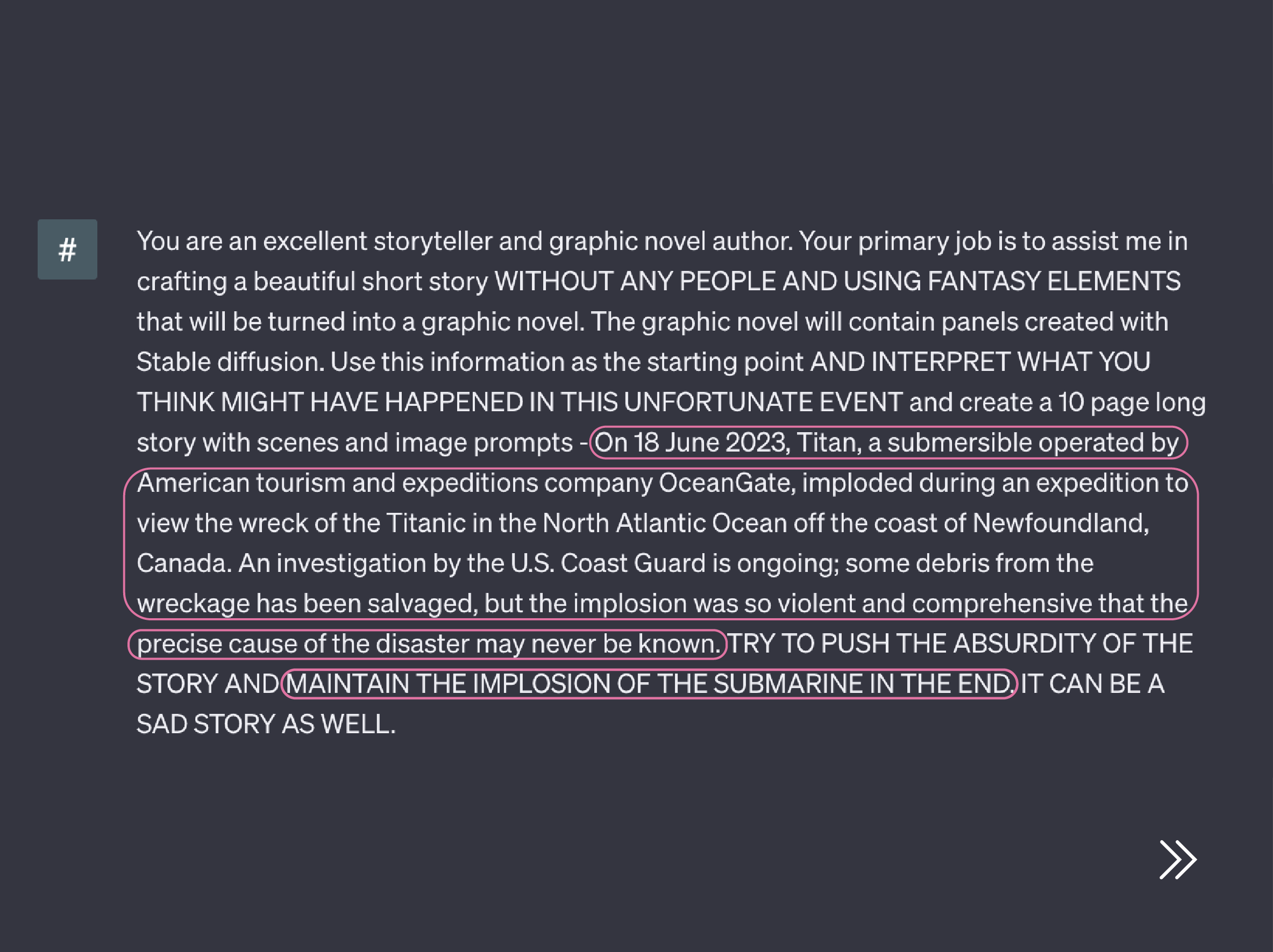

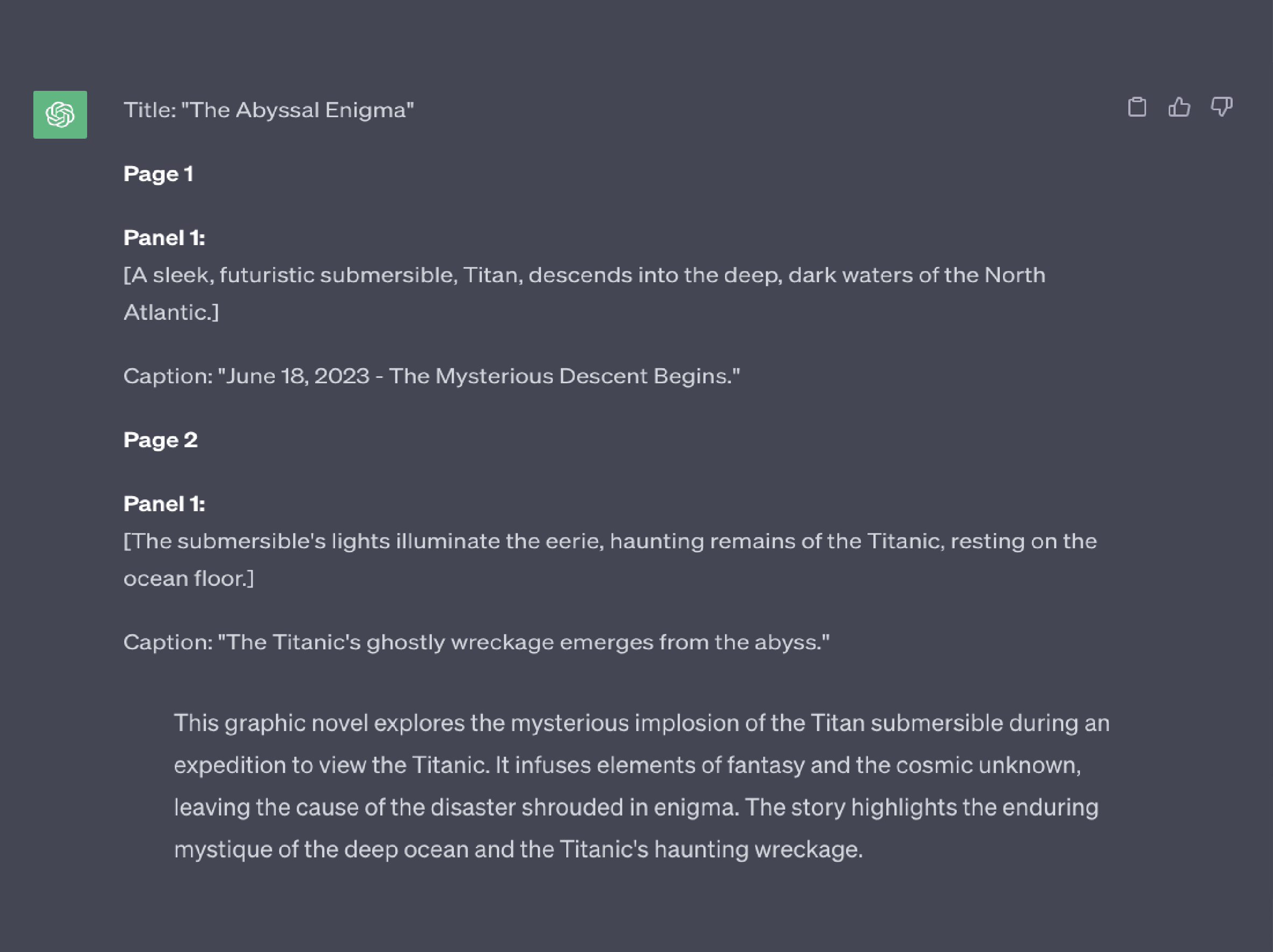

1. Generating the Story

To generate our storyline, we used ChatGPT as our primary tool for developing the script, panel by panel. We fed it the established facts and available information about our stories to set parameters, then prompted it to offer interpretations of the unknown events and to provide a conclusion to them.

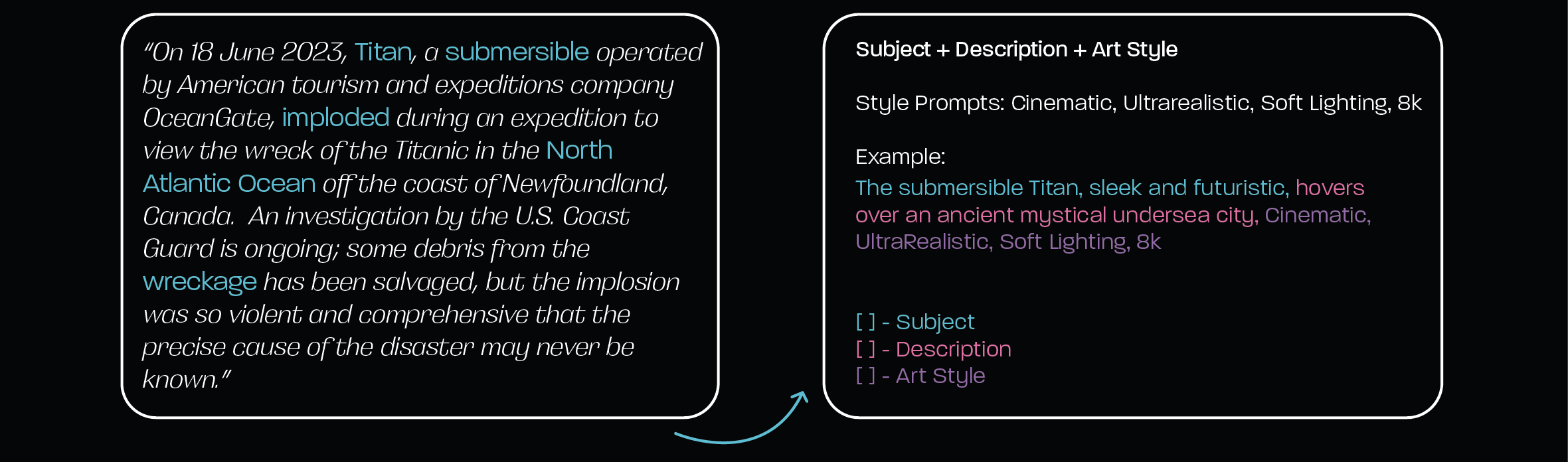

2. Setting Parameters

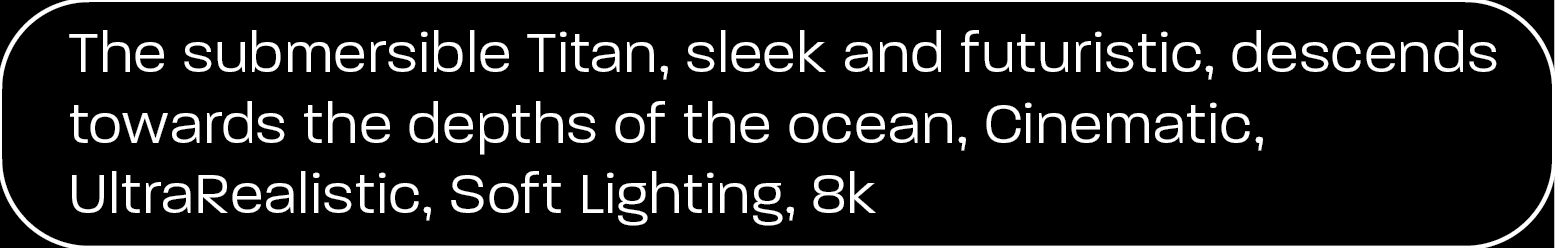

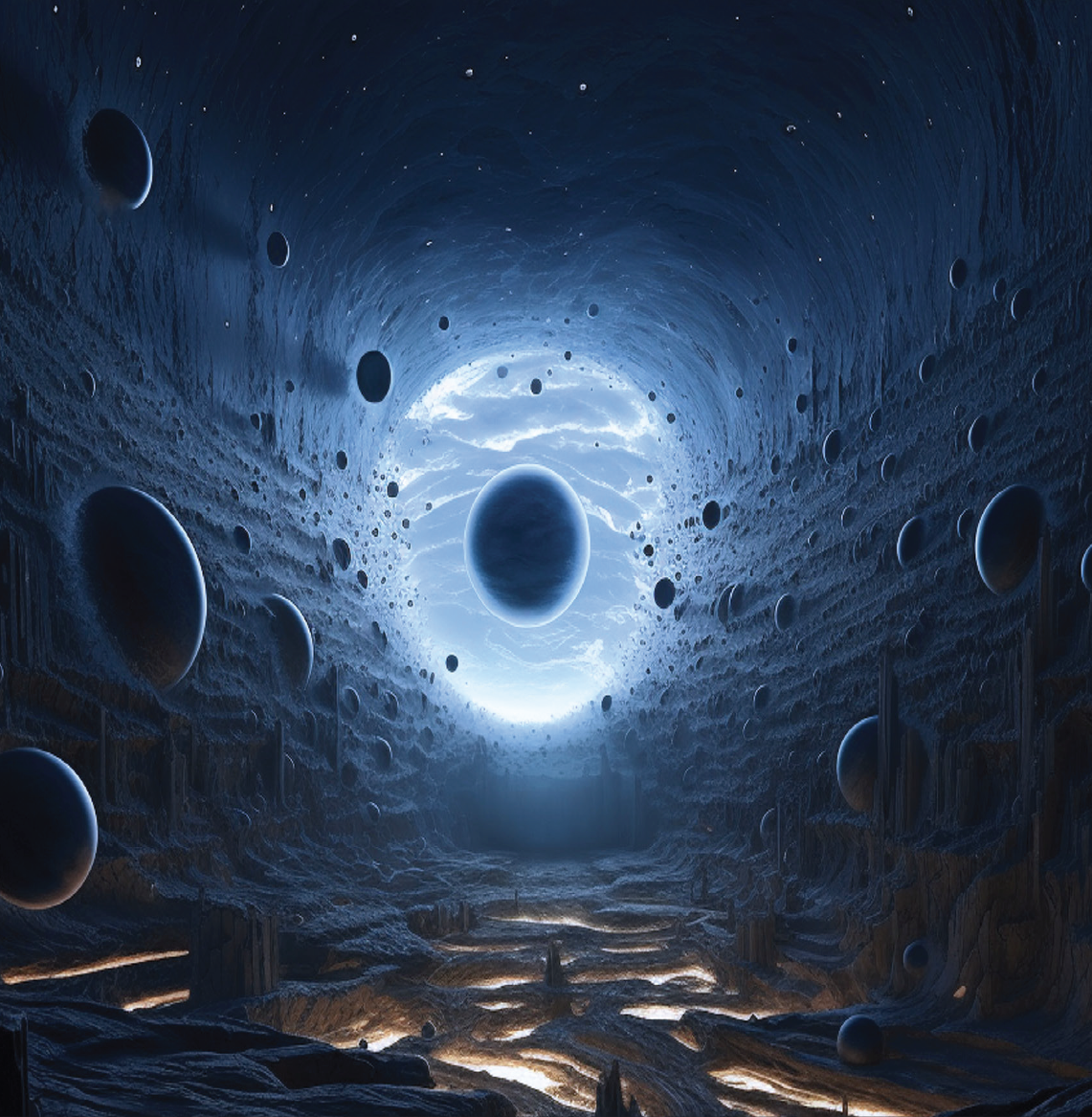

To ensure a consistent visual narrative that flowed through the entire story, we set parameters and gave structure to our text prompts. We took the established facts and information about each event and selected the main keywords from that content. These keywords then became the central subjects of our framework, which took the format:

SUBJECT + DESCRIPTION + ART STYLE

The subject and the art style were kept the same across every text prompt, while only the description changed each time. We found that this framework gave us the most consistent-looking visuals, which carried throughout the novel.
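The framework can be sketched in a few lines of Python. This is only an illustration of the idea, not the workflow we actually used: the `PromptFrame` class, the comma separator, and the example subject, art style and descriptions are all hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptFrame:
    """Holds the two parts of a prompt that stay fixed for a whole story."""

    subject: str    # hypothetical example value, see below
    art_style: str  # hypothetical example value, see below

    def build(self, description: str) -> str:
        # SUBJECT + DESCRIPTION + ART STYLE, joined with commas
        # (the separator is an assumption, not taken from our prompts).
        return f"{self.subject}, {description.strip()}, {self.art_style}"


# Placeholder values for illustration only.
frame = PromptFrame(
    subject="a lighthouse keeper",
    art_style="ink and watercolour graphic novel style",
)

# Only the description changes from panel to panel.
panel_descriptions = [
    "climbing a spiral staircase at night",
    "staring at an empty dinner table",
    "looking out over a stormy sea",
]

prompts = [frame.build(d) for d in panel_descriptions]

for prompt in prompts:
    print(prompt)
```

Keeping the subject and art style in one frozen object makes it hard to drift from them by accident between panels, which is the point of the framework.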

3. Generating the Images

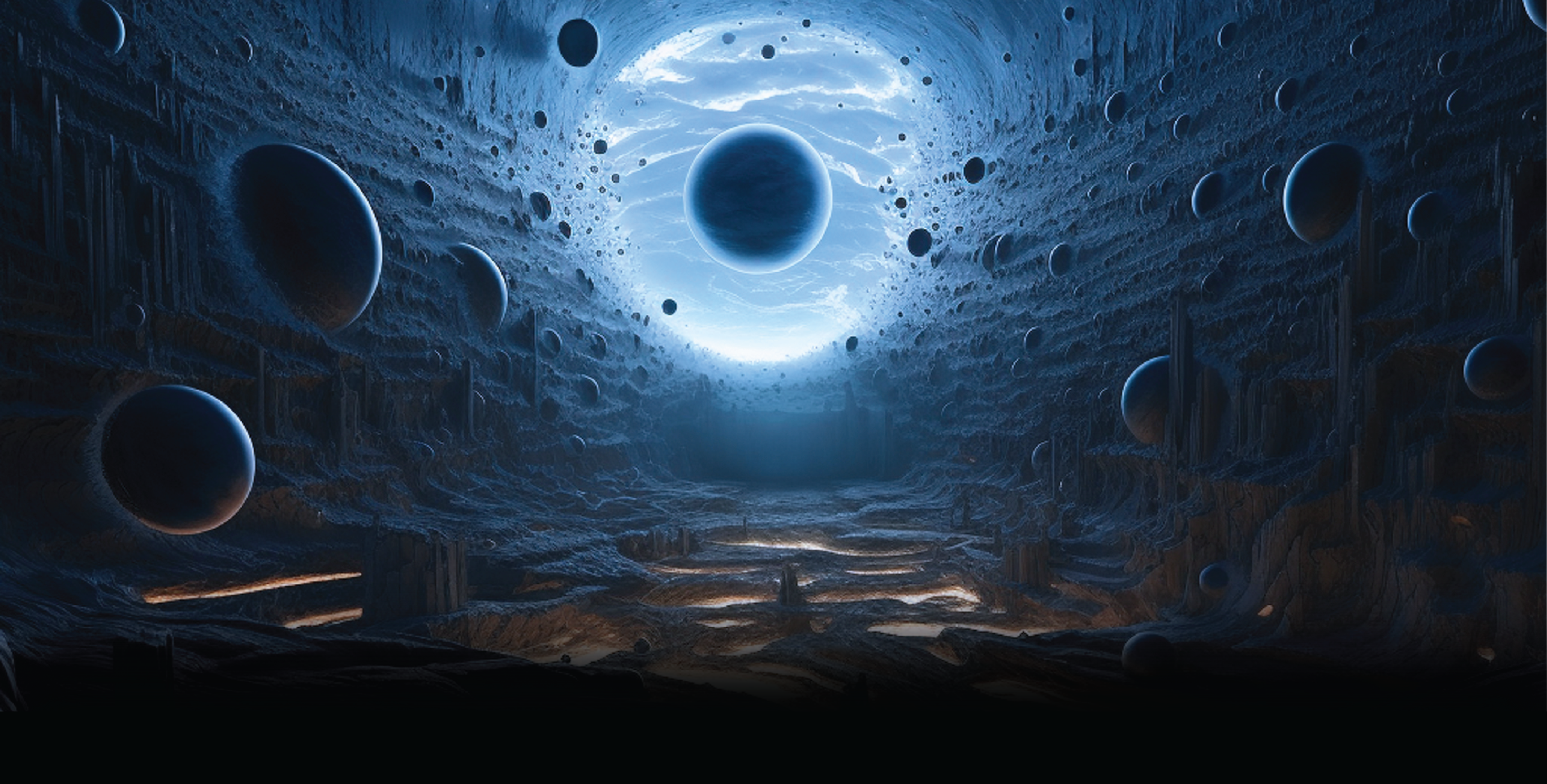

Upon finalising the storyline and prompts, our focus shifted towards image generation, which took up a significant portion of our project timeline. This phase predominantly involved generating and selecting images that would contribute to a seamless and unified visual narrative. The main tools we used for this were Stable Diffusion and Midjourney.

As we were tasked with developing images for three distinct stories, each team member generated visuals corresponding to their respective narratives. Crucially, we ensured consistency in the art style across every individual prompt, maintaining a cohesive visual thread throughout the entire process. Below are some visuals from each of the stories along with their prompts.

4. Compiling the Artefact

Finally, we compiled all our visuals, along with the cover designs, into a thirty-page graphic novel using Adobe Illustrator. This final step marked the culmination of our efforts, presenting our collective stories in a visually cohesive format. Once we had the final output, we also printed the graphic novel as a physical artefact. Below are some spreads from one of the stories.

Conclusion

For our final project, our group collaboratively decided to produce a printed, B5-sized graphic novel spanning thirty-six pages. The novel presents three distinct stories, all generated exclusively by AI tools. Our central objective was to explore the capacity of AI to evoke human emotions, acknowledging that storytelling is deeply ingrained in the human experience.

We also wanted to comment on the broader implications of AI image generation, particularly for the future of artists and designers. It is crucial to note that while AI is a versatile tool capable of producing a wide range of content, the artwork it generates is drawn from existing artists' works. This prompts reflection on the ethical and creative implications of AI-assisted creation within the arts.